Ever uploaded your selfie to an AI tool only to get back an image resembling a pizza restaurant? We have – and that's exactly how our journey to AI images began. If you're wondering how AI sometimes hilariously misunderstands your photos, keep reading.

AI-generated images have transformed dramatically in recent years. Generative AI models, the computational systems trained on vast datasets of images, translate user inputs (prompts and parameters) into visual outputs. Not long ago, we stressed over models consistently adding six fingers -- nowadays, they're so advanced they could enter human art competitions (and win).

State-of-the-art generative AI models such as Recraft V3 (Red Panda), Reve Image 1.0 (Halfmoon), Imagen 3 (v002), FLUX1.1 [pro], Ideogram v3, Midjourney v6.1, Luma Photon, Playground v3, Stable Diffusion 3.5 and 4o Image Generation represent significant advances over their predecessors by combining improved accuracy, detail retention, and versatility, while lightning-fast ones such as FLUX.1 [schnell] or Nvidia’s SANA offer incredible speed.

So, how did we go from disappointing results to ones that match or exceed expectations?

Our Mission: Turn Your Photo into Any Style -- Fast!

We wanted to make it effortless for users to transform any photo into a captivating image in their choice of artistic styles -- anime, cyberpunk, vintage paintings, or trendy yearbook aesthetics -- with no hassle, just fast, amazing results.

Rough Beginnings: Faces That Didn’t Fit

When generating AI images, we use specific parameters -- settings that guide the AI's behavior. A critical parameter is the prompt, a descriptive instruction that tells the AI what to create. For text-to-image generation, the prompt is purely textual. In our image-to-image scenario, however, we provide two types of prompts: a text prompt describing the desired style and subject, and an image prompt, which is the user's uploaded reference photo.

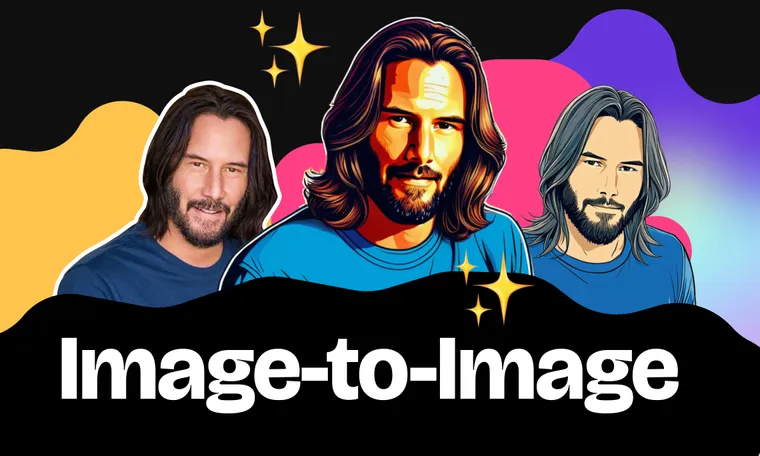

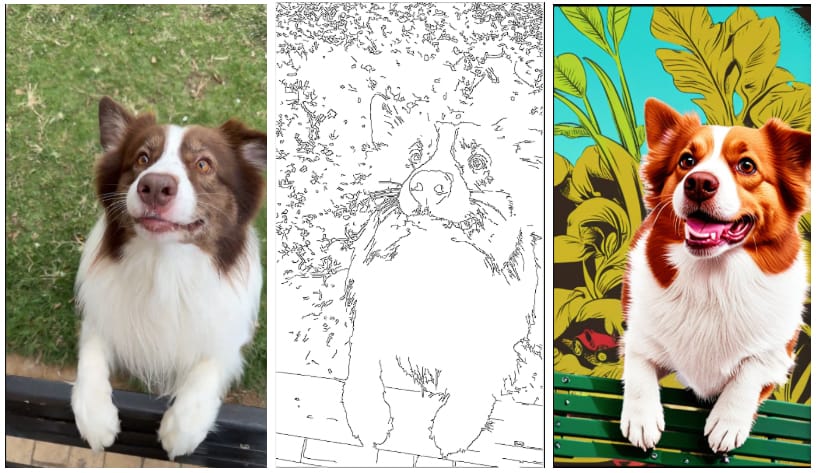

Initially, we built on the open-source generative AI model Stable Diffusion XL (SDXL), combining it with ControlNets, hoping for magic. ControlNet is a tool that extracts visual information such as outlines or depth information from the user's reference image. This visual guidance helps the AI model better match the structure and composition of the reference image, as illustrated in the outline (Canny) map for the dog or the depth map for Keanu Reeves. Despite this additional guidance, human faces still came out distorted and barely recognizable. Oddly, animal results looked fine – maybe because we’re less particular about pets’ faces?

You might wonder how a model knows which colors or gender to generate? To bridge this gap, we tried the CLIP Interrogator, a tool that automatically generates descriptive captions from a reference photo, providing additional textual context for details. For instance, the original generic prompt might look like this:

"'anime artwork illustrating {{CLIP_output}} anime style, highly detailed'"

After running CLIP Interrogator on the reference dog image, it becomes:

"'anime artwork illustrating a brown and white dog standing on a bench. anime style, highly detailed'"

Usually it worked great, but occasionally we’d get wild mistakes – such as generating a pizza by Keanu Reeves' face in the example above because the proposed caption was “a man sitting at a table with a pizza in front of him.”

Therefore, to answer how exactly AI produces these misunderstandings, generative AI models rely entirely on the textual and visual inputs provided. A common example is if the input image is too close-up, a depth ControlNet might produce an unclear depth map, leaving room for interpretation by the AI. Similarly, if the CLIP Interrogator generates an incorrect caption, the AI blindly follows the mistaken prompt.

Adapters: More Hype than Help

Next, we tested IP-adapters and T2I-adapters. IP-adapters, which allow the AI model to incorporate visual details directly from the reference image into the textual prompt, slightly improved faces but degraded overall compositions and introduced artifacts, such as weird hands in the first image below. T2I-adapters, essentially variations of ControlNets, did not appear to offer anything significantly better, as shown in the second image:

LoRAs? Too Slow for Our Scale

Between exploring ControlNet and adapters, we briefly considered using Low-Rank Adaptations (LoRAs). In simpler words, they are retrained (fine-tuned) mini-models of the original AI model on user-provided images. Although the results are significantly better, training LoRAs require the user to upload 3-10 images and the training process takes over a minute. Since we need to provide millions of users with instant, single-image results, LoRAs simply weren’t a viable solution for Zedge.

Photomaker-V1: Almost There

When Photomaker-V1 appeared, we were optimistic – it finally solved our core problem: faces looked great, and you could actually recognize who the person was without needing the reference image. But images lost their structure. Despite rigorous prompt engineering, we kept getting inconsistent scenes, especially with non-face subjects. It was a hit or miss with the background – as you see in the example below, it generated a kitchen out of nowhere:

InstantID: The True Game-Changer

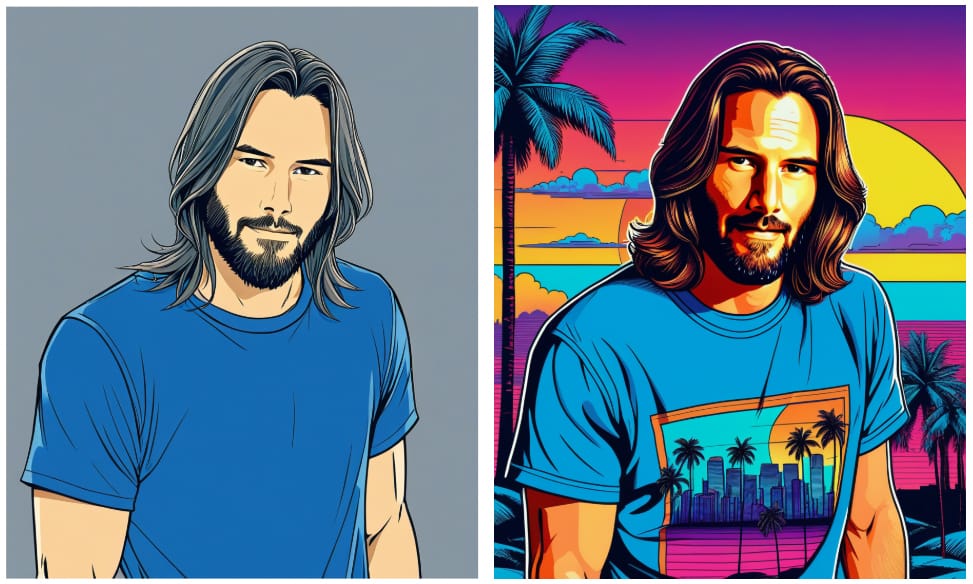

Everything clicked after obtaining a license from the organization InsightFace because their facial detection and recognition models were used by InstantID, and we used InstantID for identity-preserving generation. Faces? Perfect. Composition? Fully controllable thanks to ControlNets being a part of InstantID’s architecture. Each style – whether depth-heavy cyberpunk or pose-driven anime – became predictably stunning.

Expanding Versatility: Meet PuLID

For more stylized visuals such as animations, claymation, and pixel art, we introduced the Pure and Lightning ID method (PuLID), which is also powered by the InsightFace's facial detection and recognition models. Simply put, PuLID is very similar to InstantID, minus the built-in ControlNets as part of its architecture. Thus, it’s creative, quick, and visually exciting.

Adaptive Pipelines: Smart Fallbacks

What happens when a reference image includes multiple faces, or none at all? For those, we seamlessly switch back to trusty ControlNets, avoiding errors and keeping every result coherent. However, group photos remain a challenge. Unfortunately, ControlNets aren't great at generating faces, and both InstantID or PuLID only capture the embeddings of one face. Solving group images remains an area we're actively working to improve.

Constant Improvements

We upgraded from CLIP to a smarter solution: prompting a visual language model (InternVL) to precisely identify details like gender, age, and facial traits without noise. Our roadmap includes regularly integrating new checkpoints and LoRAs tailored specifically for different artistic styles.

Where We Stand: Unmatched Combination of Speed, Quality, and Flexibility

Today, InstantID creates incredible images in just 4.3 seconds, and PuLID even faster at 2.2 seconds (mean active time), whereas ControlNets or PhotoMaker-V1 averaged 11.7 seconds. Our custom pipelines give us speed, flexibility, and affordability -- superior to costly per-image fees charged by competitors.

Reflecting and Looking Ahead

This journey -- from bizarre failures to exceptional image-to-image quality -- was tough, but incredibly rewarding. And we're just getting started.

I challenge you to test out our pipeline -- using prompt images with and without a face -- to see the difference for yourself. After downloading the Zedge app, head to the "AI Generator" tab, select "AI Avatar," and have fun.